Difference between pages "Code Generation Activity" and "Constrained Dynamic Parser"

imported>Andy |

imported>Pascal |

||

| Line 1: | Line 1: | ||

| − | + | {{TOCright}} | |

| − | + | This page describes the requirements for a '''generic parser''' for the [[Event-B Mathematical Language]]. | |

| − | This | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | A first design proposal is also drafted. | |

| − | |||

| − | |||

| − | |||

| + | == Requirements == | ||

| + | In order to be usable [[mathematical extensions]] require that the event-b mathematical language syntax can be extended by the final user. | ||

| − | + | The lexical analyser and the syntaxic parser thus have to be extensible in a simple enough way (from a user point of vue). | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | + | === Requirements Exported by the Current Language Design === |

| − | + | ==== Operator Priority ==== | |

| + | * operator are defined by group, | ||

| + | * group of operator have a defined precedences, | ||

| + | * there may be precedences defined inside groups. | ||

| − | * | + | ==== Operator Compatibility ==== |

| + | * a compatibility table defines allowed associativities inside a group, | ||

| + | * a compatibility table defines allowed associativities between groups (it allows to forbid a syntaxic construction like <math>f(x)^{-1}\;</math> | ||

| + | : nota: this requirement was added afterwards with consistency in mind. | ||

| − | + | === Expected Extension Schemes === | |

| + | We do want to at least define operators of the following form : | ||

| + | * infix : <math>a + b\;</math> or <math>a \vdash b : c\;</math> | ||

| + | * prefix : <math>\neg a\;</math> | ||

| + | * postfix : <math> R^*\;</math> | ||

| + | * closed : <math>\|a\|\;</math> | ||

| + | * parentheses sugar : <math>(a +b) * c\;</math> | ||

| + | * functional postfix : <math> a \langle b \rangle\;</math> | ||

| + | * functional prefix : <math> \langle b \rangle f\;</math> | ||

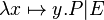

| + | * lambda like : <math> \lambda x\mapsto y . P | E\;</math> | ||

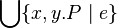

| + | * Union like : <math> \Union\{ e \mid P\}</math> or <math> \Union\{ x,y . P \mid e\}</math> | ||

| + | * sets : <math>\{a, b, c + e\}\;</math> or <math>\{ e \mid P\}\;</math> or <math>\{x,y . P \mid e\}\;</math> | ||

| − | + | We also like to define precisely precedences and associativity between existing and new operators. | |

| − | + | === Requirements exported by the dynamic feature === | |

| + | * the precedence should not be enumerated but defined by a relation, like: '+' < '*' and '*' < '**', ... | ||

| − | + | == Limitations == | |

| − | == | + | == Design Alternatives == |

| − | + | === Make Existing Parser Extensible === | |

| + | The existing parser is a LL recursive descent parser generated by the [http://www.ssw.uni-linz.ac.at/Coco/ Coco/R Compiler Generator], which makes extensive use of pre-computed lookahead, which makes it very difficult to be transformed in a sufficiently enough generic parser. | ||

| − | + | === Parser Combinator === | |

| + | :* on [http://en.wikipedia.org/wiki/Parser_combinator wikipedia]. Some implementations are referenced [http://pdos.csail.mit.edu/~baford/packrat/ here]. | ||

| + | :* with functionnal language [http://wiki.portal.chalmers.se/agda/Agda Agda] : ''[http://www.cs.nott.ac.uk/~nad/publications/danielsson-norell-mixfix.pdf Parsing Mixfix Operators] | ||

| + | :: This paper is interesting in its proposal of using an acyclic graph to define operator precedence. | ||

| + | :* with functional language [http://user.cs.tu-berlin.de/~opal/opal-language.html OPAL] : ''[http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=8A8A8B39FFBF1AEF17C782C4822C5AF6?doi=10.1.1.58.655&rep=rep1&type=url&i=0 How To Obtain Powerful Parsers That Are Elegant and Practical]'' | ||

| − | + | === Pratt Parser === | |

| + | :* [http://portal.acm.org/citation.cfm?id=512931 original paper from Vaughan Pratt] | ||

| + | :* [http://effbot.org/zone/tdop-index.htm SImple ''Pratt'' parsing in python] | ||

| + | :* [http://javascript.crockford.com/tdop/tdop.html ''Pratt'' parsing in javascript] | ||

| + | Note that a ''pratt'' parser is essentially a parser for expressions (where expression parts are themselves expressions)... But it may be tweaked to limit the accepted sub-expressions or to enforce some non-expression parts inside an expression. More on that hereafter. | ||

| − | + | === Some Existing Extensible Languages === | |

| + | * [http://www.chrisseaton.com/katahdin/ Katahdin], and the associated paper [http://www.chrisseaton.com/katahdin/katahdin-thesis.pdf A Programming Language Where the Syntax and Semantics Are Mutable at Runtime] which describes the language and its implementation (in C#). | ||

| + | * [http://itcentre.tvu.ac.uk/~clark/xmf.html XMF] whose implementation seems not be describes in details. | ||

| + | * [http://xlr.sourceforge.net/index.html XLR: Extensible Language and Runtime], which looks like a ''pratt'' parser according to the [http://xlr.svn.sourceforge.net/viewvc/xlr/trunk/xl2/xlr/parser.cpp?revision=805&view=markup sources]. | ||

| + | * [http://www.pi-programming.org/What.html <math>\pi</math>] whose implementation seems not be describes in details (but sources availables). | ||

| − | + | == Design Proposal == | |

| + | === Main Concepts === | ||

| + | ;symbol: a symbol is a lexem known by the parser. | ||

| + | ;group: each symbol belongs to one and only one group. | ||

| + | ;symbol compatibility: a relation telling if inside a group two symbol are compatibles. | ||

| + | :Two symbol are compatibles is they can be parsed without parentheses (see [[Event-B Mathematical Language]] for more on compatibility). | ||

| + | ;group compatibility: a relation telling if two groups are compatibles. | ||

| + | :Two symbol of different groups are said compatibles if their group are compatibles. | ||

| + | ;symbol associativity: a relation which gives the associative precedence of symbols inside a given group. | ||

| + | ;group associativity: a relation which gives the associative precedence of groups. | ||

| + | :Two symbol of different groups have a relative precedence given by the associative precedence of their groups. | ||

| + | ;generic parser: the parser which is defined. | ||

| + | : This parser may be called recursively (an infix expression being build upon a left and right sub-expression) and is often the default parser used when building the AST associated with a ''symbol''. | ||

| + | ;specific parser: some specific parser, predefined as helper functions to build specific AST. | ||

| + | :Some example of specific parser are the one used to parse and build list of identifiers (for quantified predicates), mapplets lists (for lambda), and so one... | ||

| − | + | === Proposed User Interface === | |

| + | ''The hereafter proposition does not preclude the final UI to take other (more user friendly forms). But the concepts should probably stay the same'' | ||

| − | + | We propose that the user will be able to create several predefined type of symbols (new types may certainly be further added by mean of eclipse extension points). When defining a symbol, the user can choose the parser used to parse the sub-expressions (default to generic parser, but a specific parser may be used) and can enforce the form of the resulting parsed sub-expression in terms of their AST root type. | |

| − | + | ==== Predefined Symbol Builders ==== | |

| + | The proposed predefined symbol builders are: | ||

| + | ;infix: For example predicate conjunction which expects two predicates for left and right parts may be defined by <code>infix("∧", gid="(logic pred)", expected_nonterm=["predicate", "predicate"])</code> | ||

| + | :As another example, substitution ≔ of group '(infix subst)' which expect two ''ident list'' for left and right expressions may be defined by <code>infix(symbol="≔", gid="(infix subst)", parsers=["ident_list", "ident_list"], expected_nonterm=["ident_list", "ident_list"])</code> | ||

| − | + | ;prefix: <code>prefix("¬", "(not pred)", expected_nonterm=["predicate"])</code> | |

| − | + | ;atomic: <code>atomic("⊤", "(atomic pred)")</code> | |

| − | |||

| − | |||

| − | |||

| − | + | ;prefix closed: used to build prefix symbol which must be followed by enclosing symbol(brackets, parentheses,..). For example <tt>finite</tt> may be defined by: <code>prefix_closed("finite", "(", ")","(atomic pred)", expected_nonterm=["expression"])</code> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | :postfix: <code>postfix("∼", gid="(unary relation)", expected_nonterm=["expression"])</code> | |

| − | |||

| − | |||

| − | |||

| − | = | + | ;quantified: <code>quantified("∀", "·", expected_nonterm=["predicate"]</code> |

| − | + | ;lambda like: <code>lambda_like("λ", "·", u"∣", gid="(quantification)", expected_nonterm=["ident list", "predicate", "expression"])</code> | |

| − | |||

| − | |||

| − | = | + | ;quantified union like: <code>Union_like("⋃", "·", "∣", gid="(quantification)")</code> |

| − | |||

| − | |||

| − | |||

| − | + | ;closed sugar: use for parentheses added to enforce associativity: <code>closed_sugar("(", ")")</code> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | == | + | ;functional postfix: <code>functional_postfix("(", ")", gid="(functional)", expected_nonterm=["expression", "expression"])</code> |

| − | |||

| − | + | Some right associative versions of those symbol builders may also be supplied (infix_right, or a specific parameter ''right associative'' parameter may also be provided to the ''infix'' symbol builder). | |

| − | |||

| + | ==== Predefined Specific Parser ==== | ||

| + | * expression lists | ||

| + | * ident lists | ||

| + | * maplet tree (for lambda) | ||

| − | |||

| − | + | ==== Defining Compatibility and Precedences ==== | |

| + | Inside a group, ''compatibility'' may be defined like: | ||

| + | <code> | ||

| + | g = group["(binop)"] | ||

| + | g.add_many_compatibility( | ||

| + | [ ("∪", "∪"), | ||

| + | ("∩", "∩"), | ||

| + | ("∩", "∖"), | ||

| + | ("∩", "▷"), | ||

| + | ("∩", "⩥"), | ||

| + | ("∘", "∘"), | ||

| + | (";", ";"), | ||

| + | (";", "▷"), | ||

| + | (";", "⩥"), | ||

| + | ("", ""), | ||

| + | ("◁", "∩"), | ||

| + | ("◁", "∖"), | ||

| + | ("◁", ";"), | ||

| + | ("◁", "⊗"), | ||

| + | ("◁", "▷"), | ||

| + | ("◁", "⩥"), | ||

| + | ("⩥", "∩"), | ||

| + | ("⩥", "∖"), | ||

| + | ("⩥", ";"), | ||

| + | ("⩥", "⊗"), | ||

| + | ("⩥", "▷"), | ||

| + | ("⩥", "⩥"), | ||

| + | ("×", "×"), ] | ||

| + | </code> | ||

| + | which defines the compatibility table for expression binary operator as defined in [[Event-B Mathematical Language]]. | ||

| − | |||

| − | + | Inside a group, ''precedence'' may be defined like: | |

| + | <code> | ||

| + | g = group["(arithmetic)"] | ||

| + | g.add_many_precedence( | ||

| + | [ ("^", "÷"), | ||

| + | ("^", "∗"), | ||

| + | ("^", "+"), | ||

| + | ("^", "−"), | ||

| + | ("^", "mod"), | ||

| + | ("+", "∗"), | ||

| + | ("−", "∗"), | ||

| + | ("+", "÷"), | ||

| + | ("−", "÷"), | ||

| + | ("+", "mod"), | ||

| + | ("−", "mod"), ] ) | ||

| + | </code> | ||

| + | which defines ^ as having the least precedence amongst the operators of the expression arithmetic group. | ||

| − | + | Similarly, precedences and compatibilities between groups may be defined : | |

| + | <code> | ||

| + | group_precedence.add(("(binop)", "(interval)")) | ||

| + | group_precedence.add(("(interval)", "(arithmetic)")) | ||

| + | group_precedence.add(("(arithmetic)", "(unary relation)")) | ||

| + | group_precedence.add(("(unary relation)", "(bound unary)")) | ||

| + | group_precedence.add(( "(bound unary)", "(bool)")) | ||

| − | + | group_compatibility.add_all( [ "(binop)", "(interval)", (arithmetic)", "(unary relation)", "(unary relation)", "(bound unary)",....]) | |

| + | </code> | ||

| − | ''' | + | ==== Some Difficulties ==== |

| + | Some difficulties appear when trying to implement an Event-B parser with the preceding scheme: | ||

| + | * A symbol may be declared by several different builders (think of unary and infix minus for example). | ||

| + | : ''Pratt'' parsers are able to cope with a symbol appearing either at the beginning of an expression or inside an expression (see ''led'' and ''nud'' hereafter). For example, unary minus and infix minus can be both defined by the proposed scheme. However, in their default form, ''pratt'' parser failed in parsing symbol like '{' which appears at the beginning of extension sets, comprehension sets and quantified sets. | ||

| + | : SOLUTION #1: The existing Event-B parser uses an infinite lookahead to determine which one of those three sets is actually being parsed. | ||

| + | : This solution hinders the extensibility of the parser, because the addition of a symbol requires to modify the lookahead. | ||

| + | : However, such an issue may be bypassed, at least in a first version, in enforcing some usage rules. Thus, a new symbol contributed by an extension but used in the lookahead may be rejected as in invalid symbol. | ||

| + | : SOLUTION #2: Implementing a backtracking parser. | ||

| + | : More precisely, it means that the three sets are attempted to be parsed. The parsing is successful if and only if one of these attempts lead to a result (''i.e''., it is a failure if several attempts or none lead to a result). | ||

| + | : It is a good solution provided that we use memorisation to alleviate the cost of parsing formulas that require heavy backtracking. | ||

| − | + | * Defining a grammar or a grammar extension is not an easy job, even with those simple mechanisms. | |

| − | + | : It would certainly be a good idea to implement a user interface to test the grammar, so that an extension designer has a way to ensure that he agrees with the parser on the semantic of his extension (or at least on the AST obtained by using it). For example, the representation of the syntax tree returned by <tt>Formula.getSyntaxTree</tt> could be compared to an expected entered string. | |

| − | ==== | + | === Core Algorithm === |

| + | ==== Main Objects ==== | ||

| + | ;{{class|KnownSymbols}}: a table associating a {{class|SymbolParser}} for each known symbol. | ||

| − | + | ;{{class|SymbolParser}}: a Parser for a specific symbol. It should at least have the following attributes: | |

| + | :* {{class|Array<Parser>}} {{ident|led}} for parsing the expression associated to the symbol {{ident|id}} when it appears in the middle of an expression (''left denotation'') | ||

| + | :* {{class|Array<Parser>}} {{ident|nud}} for parsing the expression associated to the symbol {{ident|id}} when it appears at the beginning of an expression (''null denotation'') | ||

| + | :* {{class|String}} {{ident|id}} | ||

| + | :* {{class|String}} {{ident|gid}} designing the group to which the {{ident|id}} symbol belongs to. | ||

| + | :Note that parsers in ''led'' (respectively ''nud'') will be tried one after another until one match (with backtracking to the original state in between). | ||

| − | + | ;{{class|Parser}}: A parser, which should have the following attributes | |

| + | :*{{class|Parser Array}} {{ident|subparsers}} an array of subparsers used by the {{ident|parse}} method. They defaults to {{ident|MainParser}}, but may be set to specific parsers. | ||

| + | :and the following methods: | ||

| + | :*{{ident|parse}}: this method should consume tokens and returns an AST. It may use several {{ident|subparsers}}. | ||

| − | + | ;{{class|ParserBuilder}}: the class that inherited from this class provide the interface used to create new symbols. It has the following method: | |

| + | :*{{ident|build}}: which build a SymbolParser and store it in the {{class|KnownSymbols}} container as being associated to the symbol for which the parser is being built. | ||

| + | ;{{class|MainParser}} | ||

| + | :This class inherits from {{class|Parser }} and its {{ident|parse}} function implements the core of the ''pratt'' algorithm. | ||

| − | [[Category: | + | :Without taking into account backtracking, the algorithm reads: |

| + | <blockquote><code><pre> | ||

| + | def parse(reckognized_token, has_right_associativity=False): | ||

| + | global token | ||

| + | t = token # t is the processed symbol | ||

| + | token = tokenizer.next() # token is the next symbol | ||

| + | left = t.nud() # a new expression is being parsed (null denotation : nothing on the left) | ||

| + | while has_less_priority(reckognized_token, token, has_right_associativity): | ||

| + | t = token | ||

| + | token = next_token() # if precedence allows it, we parse next expression | ||

| + | left = t.led(left) # has being left expression of current symbol (left denotation) | ||

| + | return left | ||

| + | </pre></code></blockquote> | ||

| + | |||

| + | :The parsing of a new expression is initiated by: | ||

| + | <blockquote><code><pre> | ||

| + | token = tokenizer.next() | ||

| + | ast = MainParser.parse(KnownSymbols["(eof)"]) | ||

| + | </pre></code></blockquote> | ||

| + | |||

| + | :where the {{ident|tokenizer}} returns the {{class|SymbolParser}} associated to the next lexem. | ||

| + | |||

| + | ;{{class|identListParser}}: inherits from {{class|Parser}}. Its {{ident|parse}} function implements the parsing of an ident list (like <math>a,b,c\;</math>). | ||

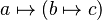

| + | ;{{class|identMapletListParser}}: inherits from {{class|Parser }}. Its {{ident|parse}} function implements the parsing of a maplet list as used in lambda expression (like <math>a \mapsto (b \mapsto c)\;</math>). | ||

| + | ;{{class|expressionListParser}}: inherits from {{class|Parser }}. Its {{ident|parse}} function implements a list of expression as used in the extension set expression. | ||

| + | |||

| + | {{TODO|section being worked on}} | ||

| + | ;{{class|infixParser}}: inherits from {{class|Parser}}. Its {{ident|parse}} function is a ''led'' parser and implements something like (pseudocode): | ||

| + | <blockquote><code><pre> | ||

| + | parse(AST left) | ||

| + | ast = new AST() | ||

| + | ast.first = left | ||

| + | verify_expected_group(ast.first, this.expected_non_terminals[0]) | ||

| + | ast.second = this.parsers[0].parse(ast, is_right_associative=False) | ||

| + | verify_expected_group(ast.second, this.expected_non_terminals[1]) | ||

| + | return ast | ||

| + | </pre></code></blockquote> | ||

| + | |||

| + | |||

| + | ;{{class|infixBuilder}}: inherits from {{class|ParserBuilder}} and has the following methods: | ||

| + | :*<code>build(Lexem lexem, GroupID gid, Array<Parser> parsers, Array<NonTermGroups> expected_non_terminals)</code> which implements something like: | ||

| + | <blockquote><code><pre> | ||

| + | if lexem in KnownSymbols: | ||

| + | else: | ||

| + | </pre></code></blockquote> | ||

| + | {{TODO}} | ||

| + | |||

| + | === Open Questions === | ||

| + | How does the user specify the expected AST ? | ||

| + | : Some examples in the existing parser: | ||

| + | :* some binary infix predicates are in fact parsed as n-ary operators (with more than 2 childrens if possible). Shall we generalize this feature ? How will this fit within the proposed extension scheme ? | ||

| + | :* bound identifiers are converted to ''de Bruijn'' indexes while constructing the AST. Is this desirable ? How does the user specify that identifier are bound or not ? | ||

| + | |||

| + | === Sample Implementation === | ||

| + | A prototype as been developed in Python to quickly try different solutions. | ||

| + | |||

| + | The development tree of the python prototype is available at http://bitbucket.org/matclab/eventb_pratt_parser/ | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | [[Category:Design Proposal]] | ||

Revision as of 17:45, 3 February 2010

This page describes the requirements for a generic parser for the Event-B Mathematical Language.

A first design proposal is also drafted.

Requirements

In order to be usable mathematical extensions require that the event-b mathematical language syntax can be extended by the final user.

The lexical analyser and the syntaxic parser thus have to be extensible in a simple enough way (from a user point of vue).

Requirements Exported by the Current Language Design

Operator Priority

- operator are defined by group,

- group of operator have a defined precedences,

- there may be precedences defined inside groups.

Operator Compatibility

- a compatibility table defines allowed associativities inside a group,

- a compatibility table defines allowed associativities between groups (it allows to forbid a syntaxic construction like

- nota: this requirement was added afterwards with consistency in mind.

Expected Extension Schemes

We do want to at least define operators of the following form :

- infix :

or

or

- prefix :

- postfix :

- closed :

- parentheses sugar :

- functional postfix :

- functional prefix :

- lambda like :

- Union like :

or

or

- sets :

or

or  or

or

We also like to define precisely precedences and associativity between existing and new operators.

Requirements exported by the dynamic feature

- the precedence should not be enumerated but defined by a relation, like: '+' < '*' and '*' < '**', ...

Limitations

Design Alternatives

Make Existing Parser Extensible

The existing parser is a LL recursive descent parser generated by the Coco/R Compiler Generator, which makes extensive use of pre-computed lookahead, which makes it very difficult to be transformed in a sufficiently enough generic parser.

Parser Combinator

- on wikipedia. Some implementations are referenced here.

- with functionnal language Agda : Parsing Mixfix Operators

- This paper is interesting in its proposal of using an acyclic graph to define operator precedence.

- with functional language OPAL : How To Obtain Powerful Parsers That Are Elegant and Practical

Pratt Parser

Note that a pratt parser is essentially a parser for expressions (where expression parts are themselves expressions)... But it may be tweaked to limit the accepted sub-expressions or to enforce some non-expression parts inside an expression. More on that hereafter.

Some Existing Extensible Languages

- Katahdin, and the associated paper A Programming Language Where the Syntax and Semantics Are Mutable at Runtime which describes the language and its implementation (in C#).

- XMF whose implementation seems not be describes in details.

- XLR: Extensible Language and Runtime, which looks like a pratt parser according to the sources.

whose implementation seems not be describes in details (but sources availables).

whose implementation seems not be describes in details (but sources availables).

Design Proposal

Main Concepts

- symbol

- a symbol is a lexem known by the parser.

- group

- each symbol belongs to one and only one group.

- symbol compatibility

- a relation telling if inside a group two symbol are compatibles.

- Two symbol are compatibles is they can be parsed without parentheses (see Event-B Mathematical Language for more on compatibility).

- group compatibility

- a relation telling if two groups are compatibles.

- Two symbol of different groups are said compatibles if their group are compatibles.

- symbol associativity

- a relation which gives the associative precedence of symbols inside a given group.

- group associativity

- a relation which gives the associative precedence of groups.

- Two symbol of different groups have a relative precedence given by the associative precedence of their groups.

- generic parser

- the parser which is defined.

- This parser may be called recursively (an infix expression being build upon a left and right sub-expression) and is often the default parser used when building the AST associated with a symbol.

- specific parser

- some specific parser, predefined as helper functions to build specific AST.

- Some example of specific parser are the one used to parse and build list of identifiers (for quantified predicates), mapplets lists (for lambda), and so one...

Proposed User Interface

The hereafter proposition does not preclude the final UI to take other (more user friendly forms). But the concepts should probably stay the same

We propose that the user will be able to create several predefined type of symbols (new types may certainly be further added by mean of eclipse extension points). When defining a symbol, the user can choose the parser used to parse the sub-expressions (default to generic parser, but a specific parser may be used) and can enforce the form of the resulting parsed sub-expression in terms of their AST root type.

Predefined Symbol Builders

The proposed predefined symbol builders are:

- infix

- For example predicate conjunction which expects two predicates for left and right parts may be defined by

infix("∧", gid="(logic pred)", expected_nonterm=["predicate", "predicate"]) - As another example, substitution ≔ of group '(infix subst)' which expect two ident list for left and right expressions may be defined by

infix(symbol="≔", gid="(infix subst)", parsers=["ident_list", "ident_list"], expected_nonterm=["ident_list", "ident_list"])

- prefix

prefix("¬", "(not pred)", expected_nonterm=["predicate"])

- atomic

atomic("⊤", "(atomic pred)")

- prefix closed

- used to build prefix symbol which must be followed by enclosing symbol(brackets, parentheses,..). For example finite may be defined by:

prefix_closed("finite", "(", ")","(atomic pred)", expected_nonterm=["expression"])

- postfix:

postfix("∼", gid="(unary relation)", expected_nonterm=["expression"])

- quantified

quantified("∀", "·", expected_nonterm=["predicate"]

- lambda like

lambda_like("λ", "·", u"∣", gid="(quantification)", expected_nonterm=["ident list", "predicate", "expression"])

- quantified union like

Union_like("⋃", "·", "∣", gid="(quantification)")

- closed sugar

- use for parentheses added to enforce associativity:

closed_sugar("(", ")")

- functional postfix

functional_postfix("(", ")", gid="(functional)", expected_nonterm=["expression", "expression"])

Some right associative versions of those symbol builders may also be supplied (infix_right, or a specific parameter right associative parameter may also be provided to the infix symbol builder).

Predefined Specific Parser

- expression lists

- ident lists

- maplet tree (for lambda)

Defining Compatibility and Precedences

Inside a group, compatibility may be defined like:

g = group["(binop)"]

g.add_many_compatibility(

[ ("∪", "∪"),

("∩", "∩"),

("∩", "∖"),

("∩", "▷"),

("∩", "⩥"),

("∘", "∘"),

(";", ";"),

(";", "▷"),

(";", "⩥"),

("", ""),

("◁", "∩"),

("◁", "∖"),

("◁", ";"),

("◁", "⊗"),

("◁", "▷"),

("◁", "⩥"),

("⩥", "∩"),

("⩥", "∖"),

("⩥", ";"),

("⩥", "⊗"),

("⩥", "▷"),

("⩥", "⩥"),

("×", "×"), ]

which defines the compatibility table for expression binary operator as defined in Event-B Mathematical Language.

Inside a group, precedence may be defined like:

g = group["(arithmetic)"]

g.add_many_precedence(

[ ("^", "÷"),

("^", "∗"),

("^", "+"),

("^", "−"),

("^", "mod"),

("+", "∗"),

("−", "∗"),

("+", "÷"),

("−", "÷"),

("+", "mod"),

("−", "mod"), ] )

which defines ^ as having the least precedence amongst the operators of the expression arithmetic group.

Similarly, precedences and compatibilities between groups may be defined :

group_precedence.add(("(binop)", "(interval)"))

group_precedence.add(("(interval)", "(arithmetic)"))

group_precedence.add(("(arithmetic)", "(unary relation)"))

group_precedence.add(("(unary relation)", "(bound unary)"))

group_precedence.add(( "(bound unary)", "(bool)"))

group_compatibility.add_all( [ "(binop)", "(interval)", (arithmetic)", "(unary relation)", "(unary relation)", "(bound unary)",....])

Some Difficulties

Some difficulties appear when trying to implement an Event-B parser with the preceding scheme:

- A symbol may be declared by several different builders (think of unary and infix minus for example).

- Pratt parsers are able to cope with a symbol appearing either at the beginning of an expression or inside an expression (see led and nud hereafter). For example, unary minus and infix minus can be both defined by the proposed scheme. However, in their default form, pratt parser failed in parsing symbol like '{' which appears at the beginning of extension sets, comprehension sets and quantified sets.

- SOLUTION #1: The existing Event-B parser uses an infinite lookahead to determine which one of those three sets is actually being parsed.

- This solution hinders the extensibility of the parser, because the addition of a symbol requires to modify the lookahead.

- However, such an issue may be bypassed, at least in a first version, in enforcing some usage rules. Thus, a new symbol contributed by an extension but used in the lookahead may be rejected as in invalid symbol.

- SOLUTION #2: Implementing a backtracking parser.

- More precisely, it means that the three sets are attempted to be parsed. The parsing is successful if and only if one of these attempts lead to a result (i.e., it is a failure if several attempts or none lead to a result).

- It is a good solution provided that we use memorisation to alleviate the cost of parsing formulas that require heavy backtracking.

- Defining a grammar or a grammar extension is not an easy job, even with those simple mechanisms.

- It would certainly be a good idea to implement a user interface to test the grammar, so that an extension designer has a way to ensure that he agrees with the parser on the semantic of his extension (or at least on the AST obtained by using it). For example, the representation of the syntax tree returned by Formula.getSyntaxTree could be compared to an expected entered string.

Core Algorithm

Main Objects

- KnownSymbols

- a table associating a SymbolParser for each known symbol.

- SymbolParser

- a Parser for a specific symbol. It should at least have the following attributes:

- Array<Parser> ledfor parsing the expression associated to the symbolidwhen it appears in the middle of an expression (left denotation)

- Array<Parser> nudfor parsing the expression associated to the symbolidwhen it appears at the beginning of an expression (null denotation)

- String id

- String giddesigning the group to which theidsymbol belongs to.

- Array<Parser>

- Note that parsers in led (respectively nud) will be tried one after another until one match (with backtracking to the original state in between).

- Parser

- A parser, which should have the following attributes

- Parser Array subparsersan array of subparsers used by theparsemethod. They defaults toMainParser, but may be set to specific parsers.

- Parser Array

- and the following methods:

- parse: this method should consume tokens and returns an AST. It may use severalsubparsers.

- ParserBuilder

- the class that inherited from this class provide the interface used to create new symbols. It has the following method:

- build: which build a SymbolParser and store it in the KnownSymbols container as being associated to the symbol for which the parser is being built.

- MainParser

- This class inherits from Parser and its parsefunction implements the core of the pratt algorithm.

- Without taking into account backtracking, the algorithm reads:

def parse(reckognized_token, has_right_associativity=False):

global token

t = token # t is the processed symbol

token = tokenizer.next() # token is the next symbol

left = t.nud() # a new expression is being parsed (null denotation : nothing on the left)

while has_less_priority(reckognized_token, token, has_right_associativity):

t = token

token = next_token() # if precedence allows it, we parse next expression

left = t.led(left) # has being left expression of current symbol (left denotation)

return left

- The parsing of a new expression is initiated by:

token = tokenizer.next()

ast = MainParser.parse(KnownSymbols["(eof)"])

- where the tokenizerreturns the SymbolParser associated to the next lexem.

- identListParser

- inherits from Parser. Its parsefunction implements the parsing of an ident list (like

).

). - identMapletListParser

- inherits from Parser . Its parsefunction implements the parsing of a maplet list as used in lambda expression (like

).

). - expressionListParser

- inherits from Parser . Its parsefunction implements a list of expression as used in the extension set expression.

TODO: section being worked on

- infixParser

- inherits from Parser. Its parsefunction is a led parser and implements something like (pseudocode):

parse(AST left)

ast = new AST()

ast.first = left

verify_expected_group(ast.first, this.expected_non_terminals[0])

ast.second = this.parsers[0].parse(ast, is_right_associative=False)

verify_expected_group(ast.second, this.expected_non_terminals[1])

return ast

- infixBuilder

- inherits from ParserBuilder and has the following methods:

build(Lexem lexem, GroupID gid, Array<Parser> parsers, Array<NonTermGroups> expected_non_terminals)which implements something like:

if lexem in KnownSymbols:

else:

TODO

Open Questions

How does the user specify the expected AST ?

- Some examples in the existing parser:

- some binary infix predicates are in fact parsed as n-ary operators (with more than 2 childrens if possible). Shall we generalize this feature ? How will this fit within the proposed extension scheme ?

- bound identifiers are converted to de Bruijn indexes while constructing the AST. Is this desirable ? How does the user specify that identifier are bound or not ?

Sample Implementation

A prototype as been developed in Python to quickly try different solutions.

The development tree of the python prototype is available at http://bitbucket.org/matclab/eventb_pratt_parser/